1. Transformers replace recurrent networks with self-attention, enabling parallel training and direct connections between distant words in long sequences.

2. Multi-head attention lets the model focus on different aspects of the input simultaneously, such as syntax and semantics.

3. Positional encoding preserves word-order information without sequential processing.

4. Variants of the original encoder-decoder architecture power everything from GPT (decoder-only) to BERT (encoder-only) to T5 (full encoder-decoder).

- Training speedup: 10x

- Context length: 2M+ tokens

- Parameter count: 175B+

What are Transformers? The Revolution in AI Architecture

Transformers are a neural network architecture introduced by Google researchers in the groundbreaking 2017 paper "Attention Is All You Need." They have since replaced recurrent neural networks (RNNs) and convolutional neural networks (CNNs) as the dominant architecture for natural language processing tasks.

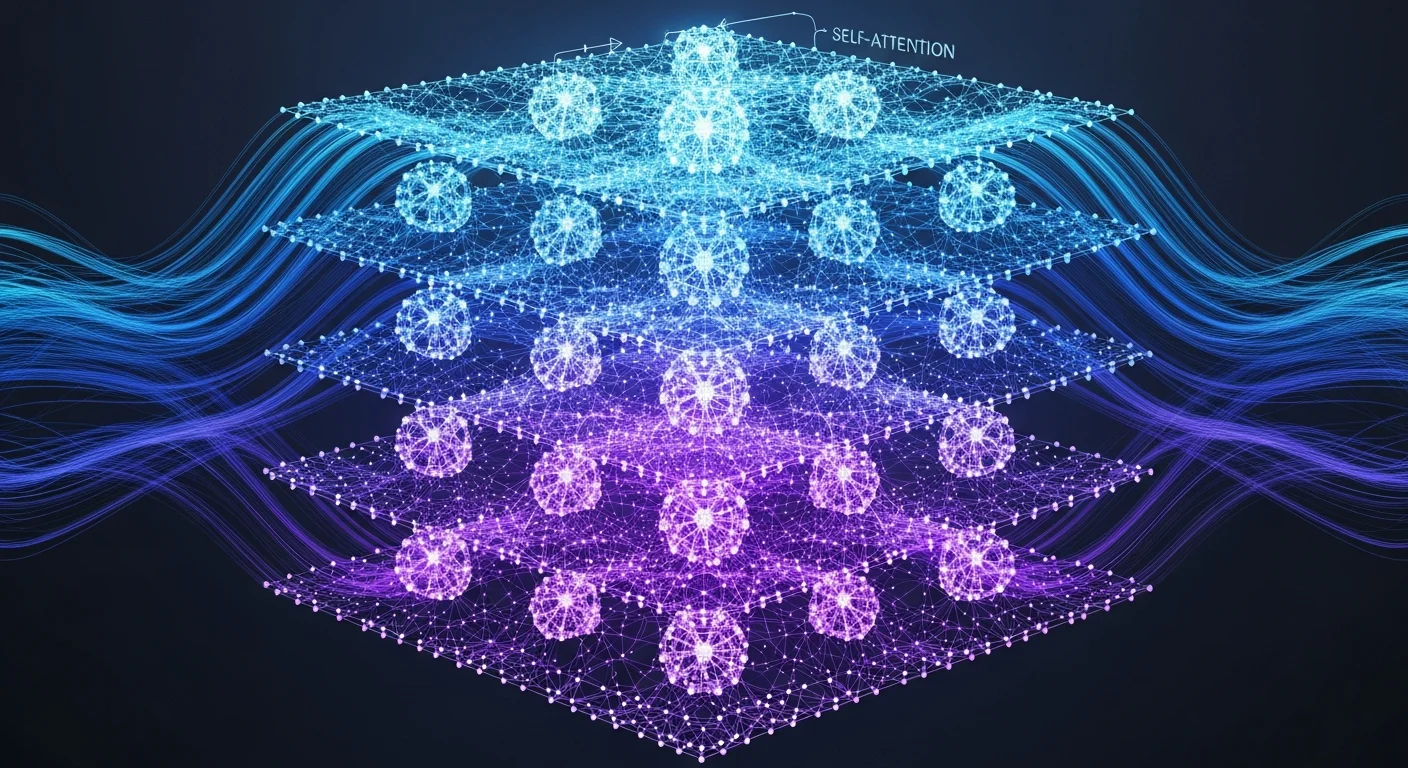

The key innovation is self-attention, which lets the model weigh the importance of every other word in a sentence when processing each word. Unlike RNNs, which process a sequence one word at a time, transformers process all words in a sequence simultaneously during training, dramatically improving training speed and making longer context windows practical.
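To make the contrast concrete, here is a minimal NumPy sketch under simplifying assumptions. A toy RNN update, h_t = tanh(x_t W_x + h_(t-1) W_h), has to loop over positions because each step needs the previous hidden state. A single self-attention layer (here with no learned projections, using the inputs directly as queries, keys, and values) computes outputs for every position with a few matrix products. All weights are random placeholders, not trained values.

```python
import numpy as np


def rnn_forward(X, W_x, W_h):
    """Toy RNN: each hidden state depends on the previous one, so positions run in order."""
    seq_len = X.shape[0]
    h = np.zeros(W_h.shape[0])
    states = []
    for t in range(seq_len):  # inherently sequential loop
        h = np.tanh(X[t] @ W_x + h @ W_h)
        states.append(h)
    return np.stack(states)


def self_attention_forward(X):
    """Toy self-attention with no learned projections (Q = K = V = X).

    Every position is handled at once with matrix products; there is no loop over time.
    """
    d_k = X.shape[-1]
    scores = X @ X.T / np.sqrt(d_k)               # (seq_len, seq_len)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ X                            # (seq_len, d_model)


rng = np.random.default_rng(0)
seq_len, d_model = 6, 8
X = rng.normal(size=(seq_len, d_model))  # 6 placeholder token embeddings
W_x = rng.normal(size=(d_model, d_model)) * 0.1
W_h = rng.normal(size=(d_model, d_model)) * 0.1

print(rnn_forward(X, W_x, W_h).shape)   # (6, 8), computed one step at a time
print(self_attention_forward(X).shape)  # (6, 8), computed for all positions together
```

Both produce one vector per position, but only the attention version can spread that work across parallel hardware, since no output depends on a previously computed output.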

Today's major language models, including GPT-4, Claude, Gemini, and LLaMA, are all transformer-based. This architecture powers everything from chatbots to code generation and has enabled the current AI revolution.

Source: Vaswani et al., 2017

How Attention Mechanisms Work: The Math Behind the Magic

Attention mechanisms solve a fundamental problem: how can a model focus on relevant parts of the input when processing each element? The transformer uses scaled dot-product attention with three key components:

- Queries (Q): What information are we looking for?

- Keys (K): What information is available in each position?

- Values (V): The actual information content at each position

The attention mechanism computes attention scores by taking the dot product of queries and keys, scaling by the square root of the key dimension, and applying softmax to get attention weights. These weights determine how much each value contributes to the output.

The Attention Formula Explained

The core formula is Attention(Q, K, V) = softmax(QKᵀ / √d_k) V, which takes only a few lines of PyTorch:

```python
import math

import torch
import torch.nn.functional as F


def scaled_dot_product_attention(Q, K, V):
    # Q: (batch_size, seq_len_q, d_k), K: (batch_size, seq_len_k, d_k), V: (batch_size, seq_len_k, d_v)
    d_k = Q.size(-1)  # dimension of queries and keys

    # Compute attention scores, scaled by sqrt(d_k): (batch_size, seq_len_q, seq_len_k)
    scores = torch.matmul(Q, K.transpose(-2, -1)) / math.sqrt(d_k)

    # Softmax over the keys gives attention weights (each row sums to 1)
    attention_weights = F.softmax(scores, dim=-1)

    # Weighted sum of the values: (batch_size, seq_len_q, d_v)
    output = torch.matmul(attention_weights, V)

    return output, attention_weights
```

The scaling factor (√d_k) keeps the dot products from growing too large: if the components of queries and keys are independent with unit variance, their dot product has variance d_k. Without scaling, large scores push the softmax function into regions with extremely small gradients, making training difficult.
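The effect of the scaling factor is easy to check numerically. The NumPy sketch below draws random Gaussian queries and keys (an assumption made purely for illustration) and shows that the spread of raw dot products grows with d_k, so an unscaled softmax piles more of its weight onto a single key than the scaled version does.

```python
import numpy as np


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
num_keys = 10

for d_k in (4, 64, 1024):
    # Components drawn from N(0, 1), so each q . k has variance d_k
    q = rng.normal(size=d_k)
    K = rng.normal(size=(num_keys, d_k))
    raw = K @ q
    unscaled = softmax(raw)
    scaled = softmax(raw / np.sqrt(d_k))
    print(f"d_k={d_k:5d}  score std={raw.std():6.2f}  "
          f"max weight unscaled={unscaled.max():.3f}  scaled={scaled.max():.3f}")
```

Dividing by √d_k acts like a softmax temperature, so the largest weight can only shrink (or stay the same) relative to the unscaled case, which keeps gradients flowing to more than one key.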

Multi-Head Attention: Parallel Processing of Different Relationships

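A single attention head produces one set of attention weights per position, so it tends to capture one type of relationship at a time. Multi-head attention runs several attention mechanisms in parallel, each with its own learned projections of the queries, keys, and values, so each head can learn a different aspect of the relationships in the input.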

For example, in the sentence 'The cat sat on the mat', different attention heads might focus on:

- Syntactic relationships: Subject-verb connections (cat → sat)

- Semantic relationships: Object associations (cat → mat)

- Positional relationships: Spatial prepositions (sat → on)

- Contextual relationships: Overall sentence meaning

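The largest GPT-3 model (175B parameters) uses 96 attention heads in each of its 96 layers, with each head operating on a 128-dimensional slice of the 12,288-dimensional model state. Running many heads in parallel lets every layer track several kinds of relationships at once, which contributes to the nuanced understanding of context that makes modern language models so effective.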
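Mechanically, multi-head attention splits the model dimension into num_heads smaller subspaces, runs scaled dot-product attention in each, and concatenates the results. The NumPy sketch below assumes random projection matrices W_q, W_k, W_v, and W_o in place of trained weights and omits masking and dropout. With GPT-3's sizes, each head would work in 12288 / 96 = 128 dimensions.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """X: (seq_len, d_model); all W_*: (d_model, d_model). Returns (seq_len, d_model) and weights."""
    seq_len, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(M):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return M.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)

    # Each head computes its own attention pattern over the sequence
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, seq_len, seq_len)
    weights = softmax(scores, axis=-1)
    heads = weights @ V                                   # (num_heads, seq_len, d_head)

    # Concatenate the heads back to (seq_len, d_model) and mix them with W_o
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o, weights


rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 16, 4  # e.g. "The cat sat on the mat" as 6 tokens
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(4))

out, weights = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads)
print(out.shape)      # (6, 16)
print(weights.shape)  # (4, 6, 6): one attention pattern per head
```

Splitting the dimension rather than giving every head the full d_model keeps the total compute close to that of one full-width head while still yielding several independent attention patterns.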

Positional Encoding: Teaching Order Without Sequence

Transformers process all positions simultaneously, which is great for speed but creates a problem: how does the model know word order? 'Cat chased dog' and 'Dog chased cat' have the same words but opposite meanings.
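A quick way to see the problem: self-attention with no position information is permutation-equivariant, so reordering the input tokens only reorders the outputs. In this NumPy sketch, three random vectors stand in for embeddings of "cat", "chased", and "dog" (they are placeholders, not real embeddings), attention uses the inputs directly as queries, keys, and values, and the per-position vectors added at the end are random placeholders rather than a real positional encoding.

```python
import numpy as np


def self_attention(X):
    """Single-head self-attention with Q = K = V = X and no positional information."""
    scores = X @ X.T / np.sqrt(X.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ X


rng = np.random.default_rng(0)
emb = {word: rng.normal(size=8) for word in ("cat", "chased", "dog")}  # placeholder embeddings

sentence_a = np.stack([emb["cat"], emb["chased"], emb["dog"]])  # "cat chased dog"
sentence_b = np.stack([emb["dog"], emb["chased"], emb["cat"]])  # "dog chased cat"

out_a, out_b = self_attention(sentence_a), self_attention(sentence_b)

# The output for "cat" is identical in both sentences, even though its role changed
print(np.allclose(out_a[0], out_b[2]))  # True

# Adding a distinct vector per position (random placeholders here) breaks the symmetry
pos = rng.normal(size=(3, 8))
out_a_pos = self_attention(sentence_a + pos)
out_b_pos = self_attention(sentence_b + pos)
print(np.allclose(out_a_pos[0], out_b_pos[2]))
```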

Positional encoding solves this by adding position information to each word's embedding. The original transformer uses sinusoidal functions that create unique patterns for each position:

```python
import numpy as np


def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encodings of shape (seq_len, d_model); assumes d_model is even."""
    pos = np.arange(seq_len)[:, np.newaxis]  # (seq_len, 1)
    # 1 / 10000^(2i / d_model) for each even dimension index 2i
    div_term = np.exp(np.arange(0, d_model, 2) * -(np.log(10000.0) / d_model))
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(pos * div_term)  # even dimensions
    pe[:, 1::2] = np.cos(pos * div_term)  # odd dimensions
    return pe
```

GPT-2 and GPT-3 use learned positional embeddings instead of fixed sinusoidal patterns, and newer models often use other schemes such as rotary embeddings, but the principle remains: adding position information allows parallel processing while preserving order.
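A learned positional embedding is simply a trainable lookup table with one row per position, added to the token embeddings. In the minimal NumPy sketch below, both tables are randomly initialized placeholders (in a real model they would be updated by gradient descent), and the vocabulary size, max_len, and token ids are hypothetical values chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, max_len, d_model = 1000, 128, 16  # hypothetical sizes

# Two lookup tables; during training both would be learned parameters
token_table = rng.normal(scale=0.02, size=(vocab_size, d_model))
position_table = rng.normal(scale=0.02, size=(max_len, d_model))


def embed(token_ids):
    """Map a sequence of token ids to token embedding + position embedding."""
    token_ids = np.asarray(token_ids)
    positions = np.arange(len(token_ids))  # 0, 1, 2, ...
    return token_table[token_ids] + position_table[positions]


x1 = embed([5, 42, 7])  # hypothetical ids for "cat chased dog"
x2 = embed([7, 42, 5])  # the same ids in reverse order

print(x1.shape)                   # (3, 16)
print(np.allclose(x1[0], x2[2]))  # False: token 5 gets a different vector at position 0 than at position 2
```

One trade-off of this design is that a learned table only covers positions up to max_len, whereas the sinusoidal formula can be evaluated for any position.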

Encoder-Decoder Architecture: The Full Transformer Structure

The original transformer has two main components working together:

- Encoder: Processes the input sequence and creates rich representations

- Decoder: Generates output sequences using encoder representations and previous outputs

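Each encoder layer contains multi-head self-attention followed by a position-wise feed-forward network, with a residual connection and layer normalization around each sublayer. Each decoder layer uses masked self-attention, so a position cannot attend to later positions, and adds a third sublayer: cross-attention, in which queries come from the decoder while keys and values come from the encoder output.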
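The difference between self-attention and cross-attention is only where Q, K, and V come from. In the NumPy sketch below (single head, random placeholder weights, no masking), queries are projected from the decoder states while keys and values are projected from the encoder output, so the attention matrix has one row per target position and one column per source position. The sequence lengths are arbitrary illustration values.

```python
import numpy as np


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def cross_attention(decoder_states, encoder_output, W_q, W_k, W_v):
    """Queries from the decoder; keys and values from the encoder."""
    Q = decoder_states @ W_q  # (tgt_len, d_model)
    K = encoder_output @ W_k  # (src_len, d_model)
    V = encoder_output @ W_v  # (src_len, d_model)
    weights = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))  # (tgt_len, src_len)
    return weights @ V, weights


rng = np.random.default_rng(0)
d_model, src_len, tgt_len = 8, 5, 3  # e.g. a 5-token source sentence, 3 target tokens so far
encoder_output = rng.normal(size=(src_len, d_model))
decoder_states = rng.normal(size=(tgt_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(3))

out, weights = cross_attention(decoder_states, encoder_output, W_q, W_k, W_v)
print(out.shape)      # (3, 8): one vector per target position
print(weights.shape)  # (3, 5): each target position attends over all source positions
```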

This full architecture was designed for sequence-to-sequence tasks like translation, but most modern applications use simplified versions optimized for specific tasks.

Transformer Variants: From BERT to GPT to T5

Different AI applications use different parts of the transformer architecture:

GPT (Decoder-Only)

Uses only the decoder stack for autoregressive text generation, predicting the next word from the previous context.

Common jobs: AI Engineer, NLP Engineer

BERT (Encoder-Only)

Uses only the encoder stack with bidirectional attention, which makes it well suited to understanding and classification tasks.

Common jobs: ML Engineer, Data Scientist

T5 (Encoder-Decoder)

Uses the complete encoder-decoder architecture and treats every NLP task as a text-to-text generation problem.

Common jobs: Research Scientist, ML Engineer

- GPT-style (decoder): generates text sequentially

- BERT-style (encoder): understands the full context
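In practice, the difference between GPT-style and BERT-style attention comes down to a mask applied to the attention scores before the softmax. In this NumPy sketch, random scores stand in for QKᵀ / √d_k. The causal mask sets scores for future positions to negative infinity so each token can attend only to itself and earlier tokens, while the bidirectional case leaves every position visible.

```python
import numpy as np


def masked_softmax(scores, mask):
    """Softmax over the last axis, giving zero weight wherever mask is False."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)


seq_len = 4
rng = np.random.default_rng(0)
scores = rng.normal(size=(seq_len, seq_len))  # stand-in for Q @ K.T / sqrt(d_k)

causal_mask = np.tril(np.ones((seq_len, seq_len), dtype=bool))  # GPT-style: no peeking ahead
full_mask = np.ones((seq_len, seq_len), dtype=bool)              # BERT-style: see everything

causal = masked_softmax(scores, causal_mask)
bidirectional = masked_softmax(scores, full_mask)

print(np.round(causal, 2))         # entries above the diagonal are 0
print((bidirectional > 0).all())   # True: every position attends to every other
```

The causal mask is what lets a decoder-only model be trained on next-word prediction at every position in parallel without seeing the answer.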

Building a Transformer: Implementation Guide

Understanding transformer implementation helps you debug models, optimize performance, and build custom architectures. Here's a simplified transformer block:

Transformer Block Implementation

```python
import torch
import torch.nn as nn


class TransformerBlock(nn.Module):
    def __init__(self, d_model, num_heads, d_ff, dropout=0.1):
        super().__init__()
        # batch_first=True so inputs are (batch, seq_len, d_model)
        self.attention = nn.MultiheadAttention(
            d_model, num_heads, dropout=dropout, batch_first=True
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

        # Position-wise feed-forward network
        self.ff = nn.Sequential(
            nn.Linear(d_model, d_ff),
            nn.ReLU(),
            nn.Linear(d_ff, d_model),
            nn.Dropout(dropout),
        )

    def forward(self, x, attn_mask=None):
        # Multi-head self-attention with residual connection, then layer norm
        attn_out, _ = self.attention(x, x, x, attn_mask=attn_mask)
        x = self.norm1(x + self.dropout(attn_out))

        # Feed-forward with residual connection, then layer norm
        ff_out = self.ff(x)
        x = self.norm2(x + ff_out)
        return x
```

This simplified implementation shows the key components: multi-head attention, a feed-forward network, residual connections, and layer normalization. Production models like GPT add refinements such as causal attention masking, layer normalization moved to the input of each sub-block, and specialized initialization schemes.

Building Your First Transformer Model

1. Start with Pre-trained Models

Use the Hugging Face Transformers library to load pre-trained models such as BERT or GPT-2, and study their architecture before building one from scratch.

2. Implement Attention Mechanism

Build scaled dot-product attention to understand the core mechanism. Start with single-head before moving to multi-head.

3. Add Positional Encoding

Implement sinusoidal or learned positional embeddings. Train with and without them to see how much position information matters.

4. Build Complete Block

Combine attention, feed-forward, residual connections, and layer normalization into a complete transformer block.

5. Stack and Train

Stack multiple blocks and train on a simple task like next-word prediction to verify your implementation works correctly.
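For the "stack and train" step, next-word prediction needs no labels beyond the text itself: the target at each position is simply the following token. The NumPy sketch below uses hypothetical token ids and random logits as a stand-in for the output of a stacked, causally masked transformer, then computes the mean cross-entropy loss that training would minimize.

```python
import numpy as np


def next_token_batch(token_ids):
    """Inputs are all tokens but the last; targets are the same sequence shifted left by one."""
    token_ids = np.asarray(token_ids)
    return token_ids[:-1], token_ids[1:]


def cross_entropy(logits, targets):
    """Mean negative log-likelihood of the target ids under softmax(logits)."""
    logits = logits - logits.max(axis=-1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=-1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets].mean()


tokens = [11, 4, 27, 9, 3]  # hypothetical ids for a 5-token sentence
inputs, targets = next_token_batch(tokens)
print(inputs, targets)      # [11  4 27  9] [ 4 27  9  3]

vocab_size = 32
rng = np.random.default_rng(0)
logits = rng.normal(size=(len(inputs), vocab_size))  # placeholder for model(inputs)
loss = cross_entropy(logits, targets)
print(loss)  # training would update the model to push this value down
```

A useful sanity check when verifying an implementation: a model that predicts a uniform distribution scores exactly log(vocab_size), so a freshly initialized model should start near that value and a training run should fall below it.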


Taylor Rupe

Full-Stack Developer (B.S. Computer Science, B.A. Psychology)

Taylor combines formal training in computer science with a background in human behavior to evaluate complex search, AI, and data-driven topics. His technical review ensures each article reflects current best practices in semantic search, AI systems, and web technology.